MCP Prompts: What They Are and How to Use Them

MCP, short for Model Context Protocol, standardizes how AI assistants and LLM applications connect to external data sources and tools. This makes it much easier to connect AI agents to different datasets and tools, because there is no need to build a custom integration for each one, and it is a major reason MCP has been widely adopted in the AI community. Key MCP concepts include sampling, roots, resources, tools, and prompts. In this article, we’ll take a thorough look at MCP prompts: how they work and how to use them.

Using MCP prompts involves client-server interaction, so let’s first get a quick overview of the MCP architecture and client-server connections.

How Client-Server Connections Work in MCP

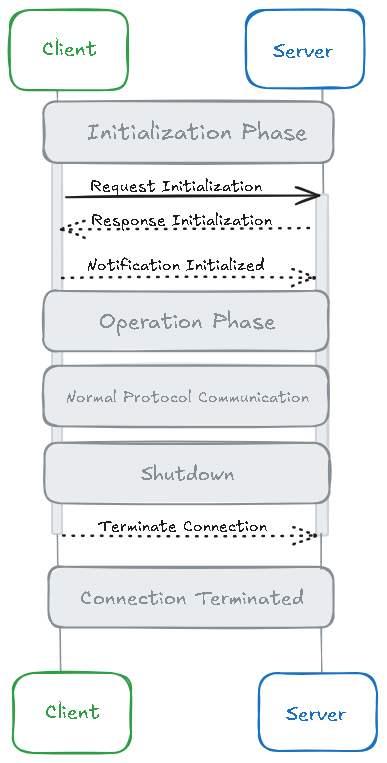

In MCP, the client-server connections lifecycle has three main phases: Initialization, Operation, and Shutdown. The initialization phase is where the client-server interaction starts. Here, the client and the server exchange capabilities and available features and share implementation details. The operation phase is where the normal protocol operations occur. Here, the client can access resources, call tools, and request prompts.

Once the interaction between the client and server is complete, the server initiates a request to terminate the connection.

The figure below shows the complete client-server connections lifecycle in MCP:
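To make the initialization phase concrete, here is a minimal TypeScript sketch of the handshake messages as plain objects rather than SDK calls. The protocol version string, the client name, and the helper names are assumptions for illustration; in practice, the SDK builds and sends these messages for you.

```typescript
// Simplified JSON-RPC 2.0 message shapes used by MCP (illustrative, not the SDK's types).
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

interface JsonRpcNotification {
  jsonrpc: "2.0";
  method: string;
  params?: Record<string, unknown>;
}

// Builds the client's `initialize` request, which opens the initialization phase.
// The protocol version and client name below are assumptions for illustration.
function buildInitializeRequest(id: number): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "initialize",
    params: {
      protocolVersion: "2025-06-18",
      capabilities: { sampling: {} },
      clientInfo: { name: "example-client", version: "1.0.0" },
    },
  };
}

// Sent by the client after it receives the server's initialize result,
// signalling that it is ready for normal operation.
function buildInitializedNotification(): JsonRpcNotification {
  return { jsonrpc: "2.0", method: "notifications/initialized" };
}

// A client should only send prompts/* requests if the server advertised
// the `prompts` capability in its initialize result.
function supportsPrompts(serverCapabilities: Record<string, unknown>): boolean {
  return serverCapabilities["prompts"] !== undefined;
}

// Example capabilities a prompt-serving server might return (hypothetical).
const exampleServerCapabilities: Record<string, unknown> = {
  prompts: { listChanged: true },
};

const init = buildInitializeRequest(1);
console.log(JSON.stringify(init));
console.log(JSON.stringify(buildInitializedNotification()));
console.log(supportsPrompts(exampleServerCapabilities)); // true
```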

What are Prompts in MCP?

If you’ve ever used an LLM like ChatGPT or Claude, you have sent prompts to the model. A prompt is the input text we give an AI model, which the model then responds to. In MCP, prompts are reusable, structured templates containing text or messages that the server exposes to the client.

The client can pass these templates to the LLM to guide its behavior. In effect, MCP prompts standardize interactions with LLMs: we can define prompt templates containing our most useful instructions in an MCP server and use them to have the AI model perform specific tasks. Because MCP prompts are reusable, they help keep the model’s behavior and responses consistent across sessions and users.

How Do MCP Prompts Work?

Prompts are designed to be user-controlled: the server exposes them to the client so that the user can explicitly choose which one to use. Clients can present them directly in the app’s UI for easy access. For example, when a user types a forward slash (/), the app can show a list of available commands or actions. Prompts can also appear as quick actions or context menu items.
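A client could turn the result of a prompts/list request into slash-command menu entries along these lines. This is a hypothetical UI helper, not part of MCP or any SDK; the `<arg>` and `[arg]` notation for required and optional arguments is just one possible convention.

```typescript
interface PromptArgument {
  name: string;
  description?: string;
  required?: boolean;
}

interface PromptInfo {
  name: string;
  description?: string;
  arguments?: PromptArgument[];
}

// Hypothetical UI model for one entry in a slash-command menu.
interface SlashCommand {
  label: string;
  hint: string;
  promptName: string;
}

// Turns prompt definitions into menu entries; <arg> marks required, [arg] optional.
function toSlashCommands(prompts: PromptInfo[]): SlashCommand[] {
  return prompts.map((prompt) => {
    const args = (prompt.arguments ?? []).map((arg) =>
      arg.required ? `<${arg.name}>` : `[${arg.name}]`
    );
    return {
      label: ["/" + prompt.name, ...args].join(" "),
      hint: prompt.description ?? "",
      promptName: prompt.name,
    };
  });
}

// Narrows the menu as the user types after "/" (simple prefix match).
function filterCommands(commands: SlashCommand[], typed: string): SlashCommand[] {
  const query = typed.replace(/^\//, "").toLowerCase();
  return commands.filter((command) => command.promptName.toLowerCase().startsWith(query));
}

const examplePrompts: PromptInfo[] = [
  {
    name: "summarize-document",
    description: "Summarize the content of a document provided via a URI.",
    arguments: [
      { name: "document_uri", required: true },
      { name: "summary_length", required: false },
    ],
  },
  { name: "space-fact", description: "Generate a fun fact about space" },
];

const commands = toSlashCommands(examplePrompts);
console.log(commands[0].label); // /summarize-document <document_uri> [summary_length]
```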

In MCP, each prompt has a unique name that identifies it, an optional human-readable description, and an optional list of arguments. Here is an example that defines a prompt named summarize-document, designed to summarize the content of a provided document:

```json
{
  "name": "summarize-document",
  "description": "Summarize the content of a document provided via a URI.",
  "arguments": [
    {
      "name": "document_uri",
      "description": "The URI of the document to be summarized.",
      "required": true
    },
    {
      "name": "summary_length",
      "description": "Desired length of the summary (e.g., short, medium, long).",
      "required": false
    }
  ]
}
```
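On the server side, definitions like this one are returned in response to a prompts/list request, which supports cursor-based pagination. The sketch below assumes a page size of 2 and encodes the next index as the cursor; the protocol treats cursors as opaque strings, so this particular encoding is only an illustration, and the `listPrompts` helper is hypothetical.

```typescript
interface PromptArgument {
  name: string;
  description?: string;
  required?: boolean;
}

interface Prompt {
  name: string;
  description?: string;
  arguments?: PromptArgument[];
}

interface ListPromptsResult {
  prompts: Prompt[];
  nextCursor?: string;
}

// The summarize-document definition above as a typed constant, plus two abbreviated entries.
const CATALOG: Prompt[] = [
  {
    name: "summarize-document",
    description: "Summarize the content of a document provided via a URI.",
    arguments: [
      { name: "document_uri", description: "The URI of the document to be summarized.", required: true },
      { name: "summary_length", description: "Desired length of the summary (e.g., short, medium, long).", required: false },
    ],
  },
  { name: "generate-email-response", description: "Generate a personalized email reply." },
  { name: "space-fact", description: "Generate a fun fact about space", arguments: [] },
];

const PAGE_SIZE = 2; // hypothetical page size

// Returns one page of prompts; the cursor here is simply the next start index as a string.
function listPrompts(all: Prompt[], cursor?: string): ListPromptsResult {
  const start = cursor === undefined ? 0 : Number(cursor);
  if (!Number.isInteger(start) || start < 0 || start > all.length) {
    throw new Error(`Invalid cursor: ${cursor}`);
  }
  const end = start + PAGE_SIZE;
  const page = all.slice(start, end);
  return end < all.length ? { prompts: page, nextCursor: String(end) } : { prompts: page };
}

const firstPage = listPrompts(CATALOG);
const secondPage = listPrompts(CATALOG, firstPage.nextCursor);
console.log(firstPage.prompts.map((p) => p.name).join(", ")); // summarize-document, generate-email-response
console.log(secondPage.prompts.map((p) => p.name).join(", ")); // space-fact
```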

A client can discover the available prompts by sending a prompts/list request. It can then send a prompts/get request to retrieve a specific prompt, supplying values for its arguments:

```json
{
  "method": "prompts/get",
  "params": {
    "name": "summarize-document",
    "arguments": {
      "document_uri": "https://example.com/reports/quarterly_report.pdf",
      "summary_length": "short"
    }
  }
}
```
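On the wire, these requests travel as JSON-RPC 2.0 messages that also carry `jsonrpc` and `id` fields. The following sketch builds both a prompts/list and a prompts/get request; the `PromptRequestBuilder` class is hypothetical. Note that prompt argument values are strings in the protocol.

```typescript
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: P;
}

interface ListPromptsParams {
  cursor?: string;
}

// Prompt argument values are strings.
interface GetPromptParams {
  name: string;
  arguments?: Record<string, string>;
}

// Hypothetical helper that assigns increasing ids so responses can be matched to requests.
class PromptRequestBuilder {
  private nextId = 1;

  private build<P>(method: string, params: P): JsonRpcRequest<P> {
    return { jsonrpc: "2.0", id: this.nextId++, method, params };
  }

  listPrompts(cursor?: string): JsonRpcRequest<ListPromptsParams> {
    return this.build<ListPromptsParams>("prompts/list", cursor === undefined ? {} : { cursor });
  }

  getPrompt(name: string, args?: Record<string, string>): JsonRpcRequest<GetPromptParams> {
    return this.build<GetPromptParams>(
      "prompts/get",
      args === undefined ? { name } : { name, arguments: args }
    );
  }
}

const builder = new PromptRequestBuilder();
const listRequest = builder.listPrompts();
const getRequest = builder.getPrompt("summarize-document", {
  document_uri: "https://example.com/reports/quarterly_report.pdf",
  summary_length: "short",
});
console.log(JSON.stringify(listRequest)); // {"jsonrpc":"2.0","id":1,"method":"prompts/list","params":{}}
console.log(JSON.stringify(getRequest));
```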

The server then responds with the prompt’s messages, with the argument values applied:

```json
{
  "description": "Summarize the content of a document provided via a URI.",
  "messages": [
    {
      "role": "user",
      "content": {
        "type": "text",
        "text": "Please summarize the following document in a short format:\n\n[Document Content from https://example.com/reports/quarterly_report.pdf]"
      }
    }
  ]
}
```
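A server has several options for filling in the document-content placeholder. One, sketched below, is to embed the document as a resource in the returned messages rather than pasting it into the text. The renderer name, the `"medium"` default, the `text/plain` MIME type, and the assumption that the document text has already been fetched and extracted are all hypothetical.

```typescript
type TextContent = { type: "text"; text: string };

// An embedded resource carries the document content inline, tagged with its URI.
type EmbeddedResource = {
  type: "resource";
  resource: { uri: string; mimeType?: string; text: string };
};

interface PromptMessage {
  role: "user" | "assistant";
  content: TextContent | EmbeddedResource;
}

interface GetPromptResult {
  description?: string;
  messages: PromptMessage[];
}

// Hypothetical server-side renderer for summarize-document.
// `documentText` is assumed to be the already-fetched, already-extracted document text.
function renderSummarizeDocument(args: Record<string, string>, documentText: string): GetPromptResult {
  const uri = args["document_uri"];
  if (!uri) {
    throw new Error("Missing required argument: document_uri");
  }
  // Falling back to "medium" when summary_length is omitted is an assumption.
  const length = args["summary_length"] ?? "medium";
  return {
    description: "Summarize the content of a document provided via a URI.",
    messages: [
      {
        role: "user",
        content: { type: "text", text: `Please summarize the following document in a ${length} format:` },
      },
      {
        role: "user",
        content: { type: "resource", resource: { uri, mimeType: "text/plain", text: documentText } },
      },
    ],
  };
}

const result = renderSummarizeDocument(
  { document_uri: "https://example.com/reports/quarterly_report.pdf", summary_length: "short" },
  "Revenue grew in Q3..."
);
console.log(JSON.stringify(result, null, 2));
```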

What are Dynamic Prompts?

Prompts can also be dynamic, adapting their content to user input and context. They can be customized with dynamic arguments, such as dates, file names, and user inputs, or include context from resources such as documents or logs. They can also chain multiple interactions to form complex workflows.

Here is an example:

```json
{
  "name": "generate-email-response",
  "description": "Generate a personalized email reply based on the provided customer email content.",
  "arguments": [
    {
      "name": "customer_email",
      "description": "The content of the customer's email.",
      "required": true
    },
    {
      "name": "customer_name",
      "description": "The name of the customer.",
      "required": false
    }
  ]
}
```
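What makes this prompt dynamic is how the server uses the arguments when rendering it. Here is a hypothetical renderer for generate-email-response in which the optional customer_name changes the instructions; the function name and the template wording are assumptions.

```typescript
interface PromptMessage {
  role: "user" | "assistant";
  content: { type: "text"; text: string };
}

// Hypothetical renderer: the optional customer_name changes the instructions,
// so the same template adapts to each request.
function renderEmailResponse(args: { customer_email?: string; customer_name?: string }): PromptMessage[] {
  const email = args.customer_email?.trim();
  if (!email) {
    throw new Error("Missing required argument: customer_email");
  }
  const greeting = args.customer_name
    ? `Address the customer by name as ${args.customer_name}.`
    : "The customer's name is unknown, so use a neutral greeting.";
  return [
    {
      role: "user",
      content: {
        type: "text",
        text: `Write a polite, personalized reply to the customer email below. ${greeting}\n\nCustomer email:\n${email}`,
      },
    },
  ];
}

const withName = renderEmailResponse({ customer_email: "Where is my order?", customer_name: "Ada" });
const withoutName = renderEmailResponse({ customer_email: "Where is my order?" });
console.log(withName[0].content.text);
console.log(withoutName[0].content.text);
```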

How are MCP Prompts Useful?

MCP lets servers define reusable prompt templates for LLM interactions. These templates give the model structured instructions on how to approach specific tasks, which makes its behavior more consistent and predictable and can lead to more accurate, contextually relevant responses.

Because prompts are user-controlled, they also let users directly control what the AI does. For example, we can create prompts for reviewing code with a focus on security, or for generating an email in a specific tone for a particular customer.
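As an illustration of the code-review example, here is a hypothetical review-code prompt whose optional focus argument defaults to security. It also shows that a prompt can return more than one message, including an `assistant` message; the prompt name, argument names, and wording are assumptions.

```typescript
interface PromptMessage {
  role: "user" | "assistant";
  content: { type: "text"; text: string };
}

// Hypothetical definition, as it might appear in a prompts/list response.
const reviewCodePrompt = {
  name: "review-code",
  description: "Review a code snippet with a chosen focus (security by default).",
  arguments: [
    { name: "code", description: "The code to review.", required: true },
    { name: "focus", description: "Review focus, e.g. security or performance.", required: false },
  ],
};

// Renders the messages that prompts/get would return for review-code.
function renderReviewCode(args: { code?: string; focus?: string }): PromptMessage[] {
  if (!args.code) {
    throw new Error("Missing required argument: code");
  }
  const focus = args.focus ?? "security";
  return [
    {
      role: "user",
      content: {
        type: "text",
        text: `Review the following code with a focus on ${focus}. For each issue, give its severity and a suggested fix.\n\n${args.code}`,
      },
    },
    // Prompts may also include assistant messages, e.g. to set up how the reply begins.
    {
      role: "assistant",
      content: { type: "text", text: `I'll review this code for ${focus} issues, starting with the most severe.` },
    },
  ];
}

const reviewMessages = renderReviewCode({ code: "eval(userInput);" });
console.log(reviewCodePrompt.name, reviewMessages.length); // review-code 2
```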

Here are some example prompt use cases:

- Context-Aware AI Assistants: By providing well-designed prompts, we can help AI assistants give more context-aware responses.
- Coding Assistants: Developers can use MCP prompts to instruct AI models to analyze code, suggest improvements, or automate repetitive tasks, which helps improve code quality and developer productivity.
- Automated Workflows: MCP prompts can serve as building blocks for automated workflows, instructing LLMs to perform specific tasks in a consistent manner.

Implementing MCP Prompts

Here we’ll create a TypeScript-based MCP server and define a static prompt (one with no arguments) that asks the model for a fun fact about space.

```typescript
import { Server } from "@modelcontextprotocol/sdk/server/index.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
import {
  ListPromptsRequestSchema,
  GetPromptRequestSchema,
  GetPromptRequest,
  McpError,
  ErrorCode,
} from "@modelcontextprotocol/sdk/types.js";

// Names of the prompts this server exposes
type PromptName = "space-fact";

// Shape of a prompt definition as returned by prompts/list
interface Prompt {
  name: string;
  description: string;
  arguments: { name: string; description: string; required: boolean }[];
}

// Prompt catalog; "space-fact" is static, so it takes no arguments
const PROMPTS: Record<PromptName, Prompt> = {
  "space-fact": {
    name: "space-fact",
    description: "Generate a fun fact about space",
    arguments: [],
  },
};

// Create the server and declare the prompts capability
const server = new Server(
  { name: "space-fact-prompts-server", version: "1.0.0" },
  { capabilities: { prompts: {} } }
);

// prompts/list: return all prompt definitions
server.setRequestHandler(ListPromptsRequestSchema, async () => {
  return { prompts: Object.values(PROMPTS) };
});

// prompts/get: return the messages for the requested prompt
server.setRequestHandler(GetPromptRequestSchema, async (request: GetPromptRequest) => {
  const promptName = request.params.name as PromptName;
  const prompt = PROMPTS[promptName];
  if (!prompt) {
    // -32602 (Invalid params) is the error code the MCP spec suggests for an unknown prompt name
    throw new McpError(ErrorCode.InvalidParams, `Prompt not found: ${promptName}`);
  }

  if (promptName === "space-fact") {
    return {
      description: prompt.description,
      messages: [
        {
          role: "user",
          content: { type: "text", text: "Tell me a fun fact about space." },
        },
      ],
    };
  }

  throw new McpError(ErrorCode.InternalError, `Prompt implementation not found: ${promptName}`);
});

// Start the server using the stdio transport.
// Top-level await requires an ES module setup (e.g. "type": "module" in package.json).
const transport = new StdioServerTransport();
await server.connect(transport);

// Log to stderr: stdout carries the JSON-RPC messages, so writing to it would corrupt the stream.
console.error("space-fact-prompts-server running on stdio");
```
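The `if` chain in the prompts/get handler works for a single prompt but grows with every new one. One alternative, sketched here with a hypothetical planet-fact prompt, is a table of handlers keyed by prompt name; `listPrompts` and `getPrompt` could then be called from the two request handlers above. This sketch is plain TypeScript with no SDK dependency, and its error handling is simplified to plain `Error`s.

```typescript
interface PromptMessage {
  role: "user" | "assistant";
  content: { type: "text"; text: string };
}

interface PromptArgument {
  name: string;
  description: string;
  required: boolean;
}

// One entry per prompt: its metadata plus a function that renders its messages.
interface PromptHandler {
  description: string;
  arguments: PromptArgument[];
  render: (args: Record<string, string>) => PromptMessage[];
}

function userText(text: string): PromptMessage {
  return { role: "user", content: { type: "text", text } };
}

const HANDLERS: Record<string, PromptHandler> = {
  "space-fact": {
    description: "Generate a fun fact about space",
    arguments: [],
    render: () => [userText("Tell me a fun fact about space.")],
  },
  // Hypothetical second prompt that takes an argument.
  "planet-fact": {
    description: "Generate a fun fact about a given planet",
    arguments: [{ name: "planet", description: "Name of the planet", required: true }],
    render: (args) => [userText(`Tell me a fun fact about the planet ${args["planet"]}.`)],
  },
};

// Result for prompts/list.
function listPrompts() {
  return Object.entries(HANDLERS).map(([name, handler]) => ({
    name,
    description: handler.description,
    arguments: handler.arguments,
  }));
}

// Result for prompts/get; the own-property check rejects names such as "toString".
function getPrompt(name: string, args: Record<string, string> = {}) {
  if (!Object.prototype.hasOwnProperty.call(HANDLERS, name)) {
    throw new Error(`Prompt not found: ${name}`);
  }
  const handler = HANDLERS[name];
  for (const arg of handler.arguments) {
    if (arg.required && !args[arg.name]) {
      throw new Error(`Missing required argument: ${arg.name}`);
    }
  }
  return { description: handler.description, messages: handler.render(args) };
}

console.log(getPrompt("planet-fact", { planet: "Mars" }).messages[0].content.text);
// Tell me a fun fact about the planet Mars.
```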

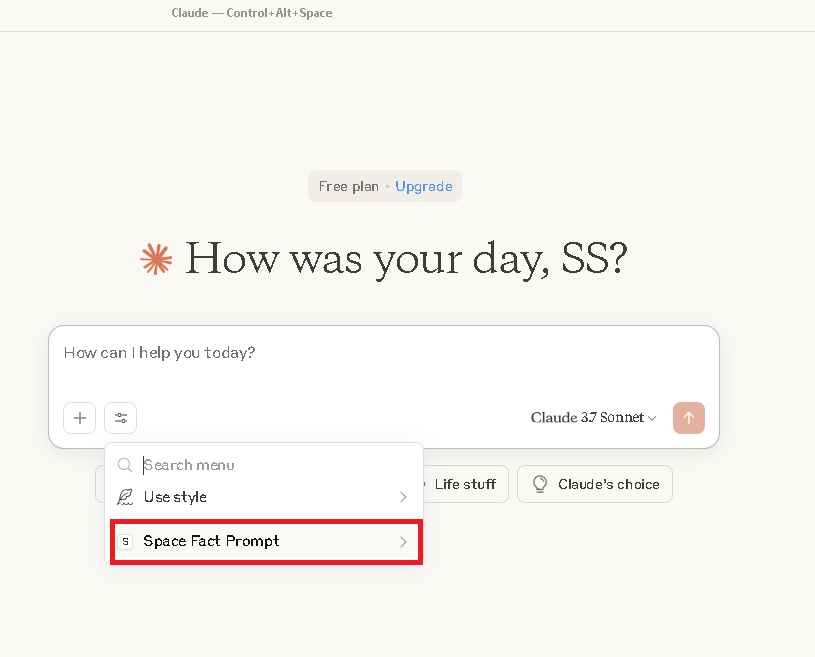

Here's how you can use a prompt in Claude Desktop:

First, add your server configuration in your Claude claude_desktop_config and then restart Claude. Claude will now show you the option to add your prompt:
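For the configuration step, Claude Desktop reads an `mcpServers` object from claude_desktop_config.json (on macOS, typically under ~/Library/Application Support/Claude/). The sketch below generates such an entry; the server name `space-fact`, the use of `node`, and the path to the compiled script are placeholders to replace with your own values.

```typescript
// Shape of the part of claude_desktop_config.json that registers MCP servers.
interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface ClaudeDesktopConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Returns a copy of the config with the given server added (or replaced),
// leaving any other configured servers untouched.
function withServer(config: ClaudeDesktopConfig, name: string, entry: McpServerEntry): ClaudeDesktopConfig {
  return { ...config, mcpServers: { ...config.mcpServers, [name]: entry } };
}

// Placeholder server name and path to the compiled server script.
const spaceFactEntry: McpServerEntry = {
  command: "node",
  args: ["/ABSOLUTE/PATH/TO/build/index.js"],
};

const config = withServer({ mcpServers: {} }, "space-fact", spaceFactEntry);
console.log(JSON.stringify(config, null, 2));
```

The printed JSON can be pasted into the config file, or merged with any servers already listed there.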

When implementing prompts, use clear prompt names and provide proper descriptions for prompts and their arguments. Handle missing arguments gracefully, and implement proper error handling. It is also a good idea to test each prompt with a variety of inputs.
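A minimal argument-validation sketch is shown below. The MCP specification recommends JSON-RPC error -32602 (Invalid params) for unknown prompt names and missing required arguments; the `PromptArgumentError` class and the choice to reject unknown argument names are assumptions, not protocol requirements.

```typescript
interface PromptArgument {
  name: string;
  description?: string;
  required?: boolean;
}

interface PromptDefinition {
  name: string;
  description?: string;
  arguments?: PromptArgument[];
}

// JSON-RPC "Invalid params" error code.
const INVALID_PARAMS = -32602;

class PromptArgumentError extends Error {
  readonly code = INVALID_PARAMS;
  constructor(message: string) {
    super(message);
    this.name = "PromptArgumentError";
  }
}

// Checks supplied arguments against a prompt definition before rendering.
function validatePromptArguments(def: PromptDefinition, args: Record<string, string> = {}): void {
  const declared = def.arguments ?? [];
  const known = new Set(declared.map((a) => a.name));
  // Rejecting undeclared argument names is a design choice.
  const unknown = Object.keys(args).filter((key) => !known.has(key));
  if (unknown.length > 0) {
    throw new PromptArgumentError(`Unknown argument(s) for ${def.name}: ${unknown.join(", ")}`);
  }
  // Empty or whitespace-only values count as missing.
  const missing = declared
    .filter((a) => a.required && !(args[a.name] ?? "").trim())
    .map((a) => a.name);
  if (missing.length > 0) {
    throw new PromptArgumentError(`Missing required argument(s) for ${def.name}: ${missing.join(", ")}`);
  }
}

const summarizeDef: PromptDefinition = {
  name: "summarize-document",
  arguments: [
    { name: "document_uri", required: true },
    { name: "summary_length", required: false },
  ],
};

try {
  validatePromptArguments(summarizeDef, { summary_length: "short" });
} catch (err) {
  if (err instanceof PromptArgumentError) {
    console.log(err.code, err.message); // -32602 Missing required argument(s) for summarize-document: document_uri
  }
}
```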

Which MCP Clients Support Prompts?

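The table below shows whether various MCP clients support prompts. Client support changes quickly, so treat this as a snapshot from the time of writing and check each client’s documentation for its current status: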

| MCP Client | Supports Prompts |
|---|---|
| 5ire | No |
| AgentAI | No |
| AgenticFlow | Yes |
| Amazon Q CLI | Yes |
| Apify MCP Tester | No |
| BeeAI Framework | No |
| BoltAI | No |
| Claude.ai | Yes |
| Claude Code | Yes |
| Claude Desktop App | Yes |
| Cline | No |
| Continue | Yes |
| Copilot-MCP | No |
| Cursor | No |
| Daydreams Agents | Yes |
| Emacs Mcp | No |
| fast-agent | Yes |
| FLUJO | No |
| Genkit | Yes |
| Glama | Yes |
| GenAIScript | No |
| Goose | No |
| gptme | No |
| HyperAgent | No |
| Klavis AI Slack/Discord/ | No |
| LibreChat | No |
| Lutra | Yes |
| mcp-agent | No |
| mcp-use | Yes |
| MCPHub | Yes |
| MCPOmni-Connect | Yes |
| Microsoft Copilot Studio | No |
| MindPal | No |
| Msty Studio | No |
| OpenSumi | No |
| oterm | Yes |
| Postman | Yes |
| Roo Code | No |
| Slack MCP Client | No |
| Sourcegraph Cody | No |
| SpinAI | No |
| Superinterface | No |
| TheiaAI/TheiaIDE | No |
| Tome | No |
| TypingMind App | No |
| VS Code GitHub Copilot | No |
| Windsurf Editor | No |
| Witsy | No |
| Zed | Yes |

Using MCP Prompts with Sampling and Tools

Sampling in MCP allows a server to request LLM completions through the client. The server sends a sampling/createMessage request to the client; the client reviews the request (often with the user’s approval), samples from the LLM, and returns the result to the server.
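A sampling request is an ordinary JSON-RPC request sent from server to client. The sketch below builds one with the `messages` and `maxTokens` fields and an optional system prompt; the helper names are hypothetical, and other optional fields such as model preferences are left out.

```typescript
interface SamplingMessage {
  role: "user" | "assistant";
  content: { type: "text"; text: string };
}

interface CreateMessageParams {
  messages: SamplingMessage[];
  systemPrompt?: string;
  maxTokens: number;
}

interface CreateMessageRequest {
  jsonrpc: "2.0";
  id: number;
  method: "sampling/createMessage";
  params: CreateMessageParams;
}

// A server should only send sampling requests if the client declared
// the `sampling` capability during initialization.
function clientSupportsSampling(clientCapabilities: Record<string, unknown>): boolean {
  return clientCapabilities["sampling"] !== undefined;
}

// Builds a server-to-client sampling request (hypothetical helper).
function buildSamplingRequest(
  id: number,
  userText: string,
  maxTokens: number,
  systemPrompt?: string
): CreateMessageRequest {
  const params: CreateMessageParams = {
    messages: [{ role: "user", content: { type: "text", text: userText } }],
    maxTokens,
  };
  if (systemPrompt !== undefined) {
    params.systemPrompt = systemPrompt;
  }
  return { jsonrpc: "2.0", id, method: "sampling/createMessage", params };
}

const samplingRequest = buildSamplingRequest(
  1,
  "Summarize the latest sales report.",
  300,
  "You are a concise business analyst."
);
console.log(JSON.stringify(samplingRequest, null, 2));
```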

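Servers use tools to expose executable functionality to clients. Tools let LLMs interact with external systems and perform real-world actions. Unlike prompts, which are user-controlled, tools are designed to be model-controlled: the model decides when to call them.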
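For comparison with prompts, this sketch shows a hypothetical tool definition with a JSON Schema `inputSchema`, and the tools/call request a client would send to invoke it. The tool name, its `region` input, and the helper functions are assumptions for illustration.

```typescript
// Hypothetical tool that a sales-data server might list in its tools/list response.
const getSalesReportTool = {
  name: "get_latest_sales_report",
  description: "Fetch the latest sales figures from the connected database.",
  inputSchema: {
    type: "object",
    properties: {
      region: { type: "string", description: "Sales region, e.g. EMEA" },
    },
    required: ["region"],
  },
};

interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Returns the required input fields that are absent from the call arguments.
function missingRequired(schema: { required?: string[] }, args: Record<string, unknown>): string[] {
  return (schema.required ?? []).filter((key) => args[key] === undefined);
}

// Builds a client-to-server tools/call request after checking required inputs.
function buildToolCall(
  id: number,
  tool: typeof getSalesReportTool,
  args: Record<string, unknown>
): ToolCallRequest {
  const missing = missingRequired(tool.inputSchema, args);
  if (missing.length > 0) {
    throw new Error(`Missing required tool input(s): ${missing.join(", ")}`);
  }
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name: tool.name, arguments: args } };
}

const call = buildToolCall(3, getSalesReportTool, { region: "EMEA" });
console.log(JSON.stringify(call));
```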

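MCP prompts are often used together with sampling and tools to make AI agents and models behave more intelligently. For example, suppose a server defines a prompt to “summarize the latest sales report.” The user selects the prompt, and the client retrieves it with a prompts/get request and passes its messages to the LLM.

Next, the model decides to call a tool exposed by the server (the client sends a tools/call request), which fetches the latest sales data from a connected database. If the server itself needs an LLM completion while handling a request, it can send a sampling/createMessage request to the client. Finally, the model produces the summary.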
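The flow above can be simulated with stubbed functions to make the order of messages visible. Everything here is hypothetical: the prompt and tool names, the fake sales data, and the stub model that requests a tool before answering. The optional sampling step is left out to keep the sketch short.

```typescript
// Every request in this simulated flow goes from the client to the server.
interface TraceEntry {
  direction: "client->server";
  method: "prompts/get" | "tools/call";
}

type ModelTurn =
  | { kind: "tool_call"; tool: string; args: Record<string, unknown> }
  | { kind: "answer"; text: string };

// Stubbed server: returns the prompt text and fake sales data (hypothetical values).
function serverGetPrompt(name: string): string {
  if (name !== "summarize-sales-report") throw new Error(`Unknown prompt: ${name}`);
  return "Summarize the latest sales report.";
}

function serverCallTool(name: string, _args: Record<string, unknown>): string {
  if (name !== "get_latest_sales_report") throw new Error(`Unknown tool: ${name}`);
  return "Q3 revenue 1.2M, up 8% from Q2";
}

// Stubbed model: asks for the tool first, then answers once a tool result is in context.
function stubModel(context: string[]): ModelTurn {
  const toolResult = context.find((entry) => entry.startsWith("TOOL_RESULT: "));
  if (toolResult === undefined) {
    return { kind: "tool_call", tool: "get_latest_sales_report", args: {} };
  }
  return { kind: "answer", text: `Summary: ${toolResult.slice("TOOL_RESULT: ".length)}` };
}

function runSalesSummaryFlow(maxTurns = 5): { trace: TraceEntry[]; answer: string } {
  const trace: TraceEntry[] = [];
  // 1. The user picks the prompt; the client fetches it with prompts/get.
  trace.push({ direction: "client->server", method: "prompts/get" });
  const context: string[] = [serverGetPrompt("summarize-sales-report")];
  for (let turn = 0; turn < maxTurns; turn++) {
    const output = stubModel(context);
    if (output.kind === "answer") {
      return { trace, answer: output.text };
    }
    // 2. The model requests a tool; the client forwards it as tools/call.
    trace.push({ direction: "client->server", method: "tools/call" });
    context.push("TOOL_RESULT: " + serverCallTool(output.tool, output.args));
  }
  throw new Error("Model did not produce an answer within the turn limit");
}

const { trace, answer } = runSalesSummaryFlow();
console.log(trace.map((t) => t.method).join(" -> ")); // prompts/get -> tools/call
console.log(answer); // Summary: Q3 revenue 1.2M, up 8% from Q2
```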

Security Considerations

Prompt injection attacks are a common security issue with MCP. These attacks can occur when MCP servers expose prompts or tool descriptions containing malicious instructions, allowing threat actors to manipulate the behavior of AI models.

Because prompts are often used alongside tools, the AI model processes user input, prompt templates, and tool descriptions together as part of its context. An attacker could publish a prompt template containing hidden malicious instructions, or hide such instructions in a tool description (an attack known as tool poisoning). When a user selects such a prompt, or the model reads such a tool description, the model may take unintended actions.

To mitigate these risks, validate all prompts and arguments, sanitize user input, and audit prompt usage. Validating generated content before acting on it also helps limit the impact of prompt injection attacks.
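As a starting point for argument sanitization, here is a minimal sketch. The length limit, the patterns, and the `<untrusted ...>` delimiter format are illustrative assumptions; pattern matching cannot reliably catch prompt injection, so treat this as one layer of defense rather than a complete solution.

```typescript
// Illustrative limit and patterns; tune them for your own prompts.
const MAX_ARGUMENT_LENGTH = 4000;
const SUSPICIOUS_PATTERNS: RegExp[] = [
  /ignore (all )?(previous|prior) instructions/i,
  /<\/?\s*system\s*>/i,
];

// Removes control characters (keeping tab, newline, carriage return),
// enforces a length limit, and rejects a few known injection phrasings.
function sanitizeArgument(value: string): string {
  const cleaned = value.replace(/[\u0000-\u0008\u000B\u000C\u000E-\u001F\u007F]/g, "");
  if (cleaned.length > MAX_ARGUMENT_LENGTH) {
    throw new Error(`Argument exceeds ${MAX_ARGUMENT_LENGTH} characters`);
  }
  for (const pattern of SUSPICIOUS_PATTERNS) {
    if (pattern.test(cleaned)) {
      throw new Error("Argument rejected: matches a suspicious pattern");
    }
  }
  return cleaned;
}

// Wraps untrusted text in labeled delimiters so the template can tell the model
// to treat it as data; delimiter look-alikes inside the value are neutralized.
function wrapUntrusted(label: string, value: string): string {
  const neutralized = value.replace(/<\/?untrusted/gi, "[removed]");
  return `<untrusted ${label}>\n${neutralized}\n</untrusted ${label}>`;
}

console.log(wrapUntrusted("customer_email", sanitizeArgument("Where is my order #1234?")));
```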

Final Thoughts

MCP prompts are reusable, structured templates that a server exposes to clients. Because prompts are user-controlled, they can be surfaced in the application’s UI, letting users easily select the prompt they want.

Dynamic prompts adapt their content to user input and context: they can be customized with dynamic arguments or include context from different resources. With MCP prompts, users can steer the behavior and responses of the AI model, which can help it produce more accurate responses.