MCP Use Cases

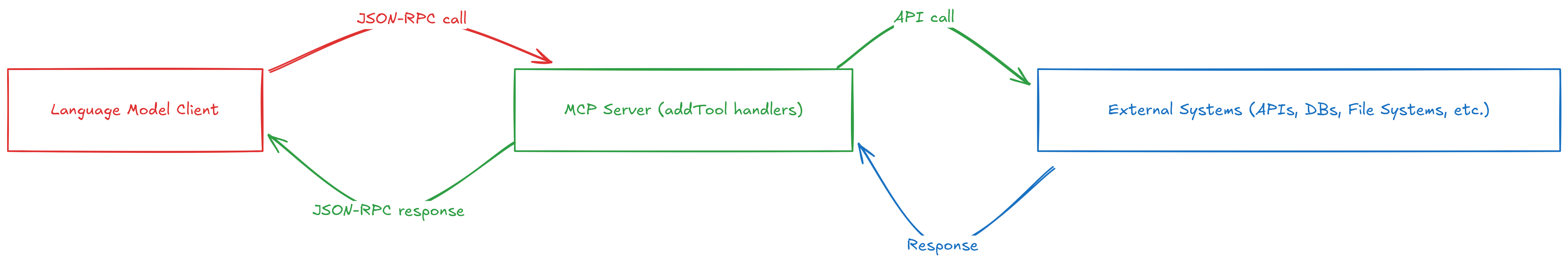

Model Context Protocol (MCP) connects language models to real-world tools and data sources, creating a standardized way for AI applications to interact with external systems. When you develop AI-powered applications that need to do more than generate text, MCP provides the framework for these integrations.

End-to-end MCP flow from LLM client through server to external APIs and back

Understanding real-world MCP use cases helps developers see beyond theoretical possibilities to practical implementations. These examples show how MCP bridges the gap between AI models and concrete actions in various domains.

GitHub Repository Management

Software teams often need to answer questions about their repositories quickly: "When was this feature added?" or "Who has been working on the authentication module?" The GitHub MCP server turns these natural language questions into API calls, giving AI assistants direct access to your codebase information.

A product manager can ask about recent development activity without learning Git commands. The GitHub MCP server implements this functionality through tools like the repository information fetcher:

    // GitHub MCP tool for fetching repository information
    // Assumes `server` is an MCP server instance created elsewhere.
    import { Octokit } from "@octokit/rest";

    server.addTool(
      "get_repository",
      "Get information about a GitHub repository",
      {
        type: "object",
        properties: {
          owner: { type: "string", description: "Repository owner" },
          repo: { type: "string", description: "Repository name" }
        },
        required: ["owner", "repo"]
      },
      async ({ owner, repo }) => {
        // Authenticate with a personal access token read from the environment
        const octokit = new Octokit({ auth: process.env.GITHUB_TOKEN });
        const { data } = await octokit.rest.repos.get({ owner, repo });
        // Return a trimmed summary as pretty-printed JSON text
        return {
          content: [{
            type: "text",
            text: JSON.stringify({
              name: data.name,
              description: data.description,
              stars: data.stargazers_count,
              forks: data.forks_count,
              language: data.language,
              lastUpdated: data.updated_at
            }, null, 2)
          }]
        };
      }
    );

The GitHub MCP server does more than read information. When used with the Goose client, developers can make actual code changes through natural language prompts.

Users can create branches, edit files, and even open pull requests entirely through conversation. For example, a developer might say: "Create a new branch called feature-login in my company/webapp repository, add a file called login.js with basic authentication code, and open a pull request for review." The MCP server handles the entire workflow from branch creation to PR submission.

Complex Problem Solving

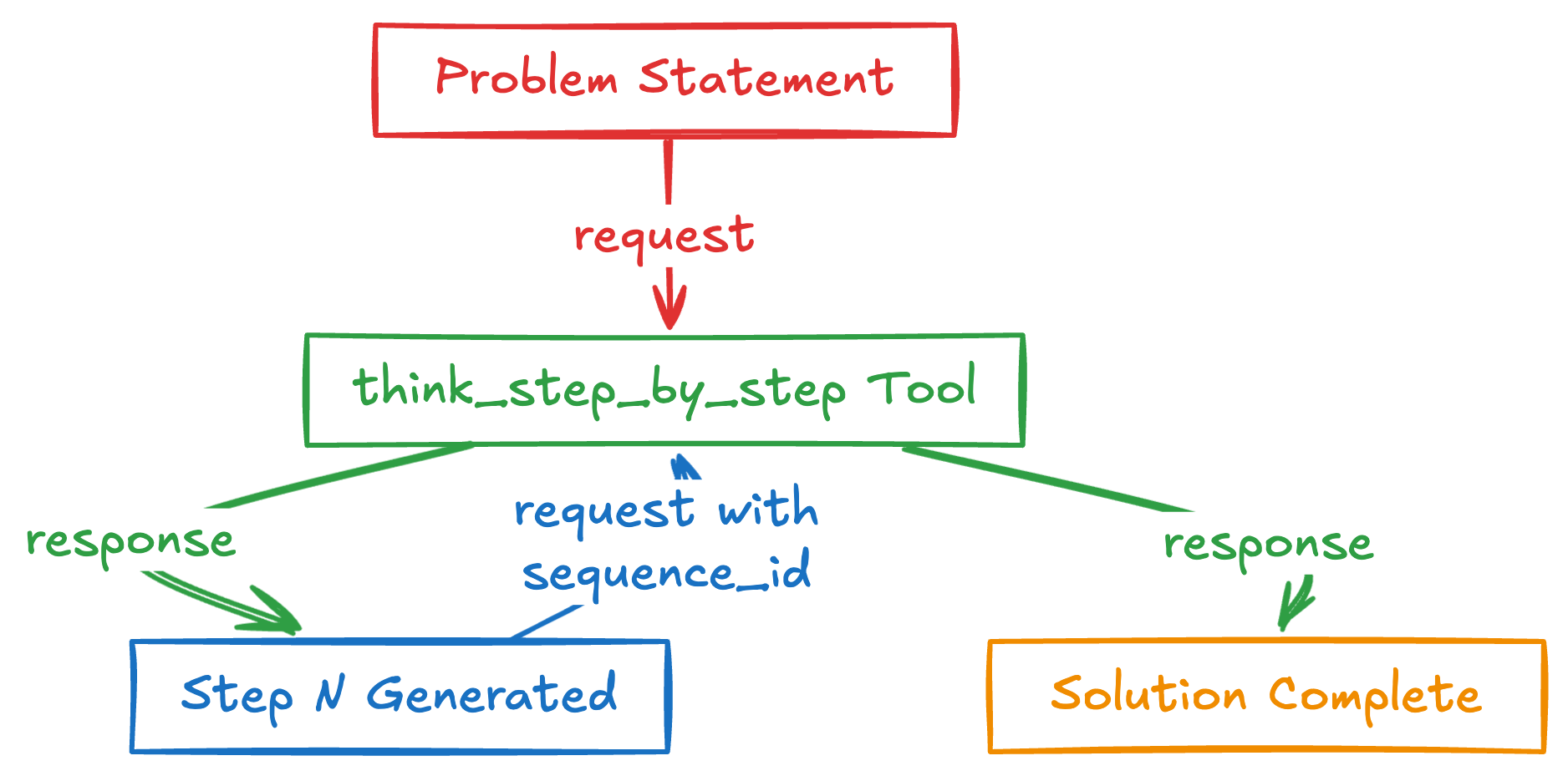

Complex problem-solving often falters when tackled all at once. The Sequential Thinking MCP server helps AI models break down problems into manageable steps, which suits debugging tricky code issues or working through complex logic problems.

Breaking complex problems into iterative steps via the sequential_thinking tool

The Sequential Thinking server works by structuring reasoning as a series of explicit steps:

    // Sequential Thinking MCP tool (simplified)
    server.addTool(
      "think_step_by_step",
      "Solve a complex problem using sequential reasoning",
      {
        type: "object",
        properties: {
          problem: { type: "string", description: "Problem statement to solve" },
          sequence_id: {
            type: "string",
            description: "ID of an existing thought sequence to continue"
          }
        },
        required: ["problem"]
      },
      async ({ problem, sequence_id }) => {
        // Implementation for breaking down problem-solving into steps
        // ...
      }
    );

For mathematical problems specifically, the WolframAlpha MCP server provides a powerful complement to Sequential Thinking. When a user prompts Claude with "Integrate 2x+4 with wolfram," the WolframAlpha MCP handles the computational heavy lifting, allowing Claude to provide precise mathematical answers that would otherwise be prone to errors.

Sequential reasoning can improve accuracy because the model builds a solution incrementally instead of attempting to solve everything at once. A developer debugging a complex algorithm can watch the AI work step by step, examining each part of the problem in isolation. Similarly, a data scientist can trace a complex data transformation one stage at a time without getting lost in the details.
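
As a rough illustration of how steps might accumulate under a `sequence_id`, the sketch below keeps thought sequences in an in-memory map. The `addThought` function, the `seq-N` ID format, and the returned shape are assumptions made for this example, not the Sequential Thinking server's actual implementation.

```javascript
// Hypothetical in-memory store: sequence ID -> ordered list of thoughts.
const sequences = new Map();
let nextId = 1;

// Append one reasoning step. A new sequence is started when the ID is
// missing or unknown.
function addThought(thought, sequenceId) {
  let id = sequenceId;
  if (!id || !sequences.has(id)) {
    id = `seq-${nextId++}`;
    sequences.set(id, []);
  }
  const steps = sequences.get(id);
  steps.push(thought);
  // Return a copy so callers cannot mutate the stored sequence.
  return { sequence_id: id, step: steps.length, steps: [...steps] };
}

const first = addThought("Restate the bug: totals are off by one.");
const second = addThought("Check the loop bounds.", first.sequence_id);
console.log(second.step, second.steps);
```

Each call returns the sequence so far, so a client can show the reasoning one step at a time.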

Database Integrations

Companies store critical information in databases that traditional AI models can't access. The PostgreSQL MCP server connects AI assistants directly to your database, allowing them to retrieve exactly the information users need without requiring SQL expertise.

The PostgreSQL MCP server implements tools for querying data:

    // PostgreSQL MCP tool for querying data
    server.addTool(
      "query_database",
      "Execute a SQL query against a PostgreSQL database",
      {
        type: "object",
        properties: {
          query: { type: "string", description: "SQL query to execute" },
          parameters: {
            type: "array",
            // An empty schema accepts any JSON value; "any" is not a valid JSON Schema type
            items: {},
            description: "Query parameters"
          },
          row_limit: {
            type: "number",
            description: "Maximum number of rows to return",
            default: 100
          }
        },
        required: ["query"]
      },
      async ({ query, parameters = [], row_limit = 100 }) => {
        // Implementation for secure database querying
        // ...
      }
    );

The server includes protection measures to prevent destructive operations and control resource usage. It validates queries against blocklists, enforces execution timeouts, and limits the number of rows returned.
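
A minimal sketch of what such checks could look like follows. The `checkQuery` function, the keyword list, and the `MAX_ROWS` cap are illustrative assumptions, not the PostgreSQL MCP server's actual code. Execution timeouts are not shown here; in PostgreSQL they are typically enforced with the `statement_timeout` setting.

```javascript
// Hypothetical blocklist and row cap chosen for this example.
const BLOCKED_KEYWORDS = ["insert", "update", "delete", "drop", "alter", "truncate", "grant"];
const MAX_ROWS = 1000;

// Validate a query and wrap it so the database enforces the row limit.
function checkQuery(query, rowLimit = 100) {
  // Drop trailing semicolons, then refuse anything that still contains one.
  const normalized = query.trim().replace(/;+\s*$/, "");
  if (normalized.includes(";")) {
    return { ok: false, reason: "multiple statements are not allowed" };
  }
  if (!/^(select|with)\b/i.test(normalized)) {
    return { ok: false, reason: "only read queries are allowed" };
  }
  const blocked = BLOCKED_KEYWORDS.find((kw) =>
    new RegExp(`\\b${kw}\\b`, "i").test(normalized)
  );
  if (blocked) {
    return { ok: false, reason: `blocked keyword: ${blocked}` };
  }
  // Clamp the requested limit to the range 1..MAX_ROWS.
  const requested = Number.isFinite(rowLimit) ? Math.floor(rowLimit) : 100;
  const limit = Math.min(Math.max(1, requested), MAX_ROWS);
  return {
    ok: true,
    limit,
    sql: `SELECT * FROM (${normalized}) AS guarded LIMIT ${limit}`,
  };
}

console.log(checkQuery("SELECT name FROM products", 50));
console.log(checkQuery("DROP TABLE products"));
```

A keyword blocklist is a coarse filter: it also rejects harmless queries whose string literals happen to contain a blocked word or a semicolon. Connecting with a read-only database role is a stronger safeguard, and the two can be combined.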

When a sales manager asks "What were our top-selling products last quarter, broken down by region?", Claude handles the translation from natural language to SQL. The PostgreSQL MCP server executes this query securely and returns the results in a structured format that Claude can analyze and explain. This transforms how organizations access their data, making business intelligence available through conversation instead of requiring specialized query knowledge or waiting for reports.

File System Operations

Documents, spreadsheets, and other files often contain valuable information locked away in unstructured formats. The Filesystem MCP server gives AI assistants controlled access to your files, enabling them to read content, analyze documents, and extract relevant information.

The core functionality of the file reading tool is remarkably simple:

    // Filesystem MCP tool for reading files
    server.addTool(
      "read_file",
      "Read content from a file",
      {
        type: "object",
        properties: {
          path: { type: "string", description: "Path to the file" },
          encoding: {
            type: "string",
            description: "File encoding",
            enum: ["utf8", "base64", "hex"],
            default: "utf8"
          }
        },
        required: ["path"]
      },
      async ({ path: filePath, encoding = "utf8" }) => {
        // Implementation with security checks and file reading
        // ...
      }
    );

The Filesystem MCP server architecture prioritizes security through path validation, file type restrictions, size limitations, and permission controls. This allows researchers to ask an AI to analyze patterns across multiple research papers stored locally, or financial analysts to request summaries of quarterly reports without manually opening each file.
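
The path validation and file type restriction mentioned above could be sketched as follows. The `ALLOWED_ROOT` directory, the extension list, and the `resolveSafePath` helper are hypothetical, and the sketch handles POSIX-style paths by plain string manipulation so that it stays self-contained. In a real Node.js server, `path.resolve` together with `fs.realpath` would be the usual choice, since they also account for symbolic links.

```javascript
// Hypothetical configuration for this example.
const ALLOWED_ROOT = "/srv/documents";
const ALLOWED_EXTENSIONS = [".txt", ".md", ".csv", ".json"];

// Collapse "." and ".." segments of a POSIX-style path without touching the disk.
function normalizePosix(path) {
  const out = [];
  for (const segment of path.split("/")) {
    if (segment === "" || segment === ".") continue;
    if (segment === "..") out.pop();
    else out.push(segment);
  }
  return "/" + out.join("/");
}

// Resolve a requested path against the allowed root and reject anything
// that escapes the root or has an extension outside the allowlist.
function resolveSafePath(requested) {
  const joined = requested.startsWith("/") ? requested : `${ALLOWED_ROOT}/${requested}`;
  const resolved = normalizePosix(joined);
  if (resolved !== ALLOWED_ROOT && !resolved.startsWith(ALLOWED_ROOT + "/")) {
    throw new Error(`Path escapes allowed root: ${requested}`);
  }
  const name = resolved.slice(resolved.lastIndexOf("/") + 1);
  const dot = name.lastIndexOf(".");
  const extension = dot === -1 ? "" : name.slice(dot).toLowerCase();
  if (!ALLOWED_EXTENSIONS.includes(extension)) {
    throw new Error(`File type not allowed: ${name}`);
  }
  return resolved;
}

console.log(resolveSafePath("reports/q1.csv"));
```

Comparing against `ALLOWED_ROOT + "/"` rather than the bare prefix matters: a sibling directory such as `/srv/documents-evil` would otherwise pass the check.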

For specific file formats, the server can include specialized handlers. For instance, a CSV parser helps Claude work with tabular data more effectively. When a user asks Claude to "Find all quarterly reports from 2024 in the reports directory and summarize the revenue trends across product categories," the model uses file system tools to locate, access, and analyze the relevant documents.

Web Searches and Content Extraction

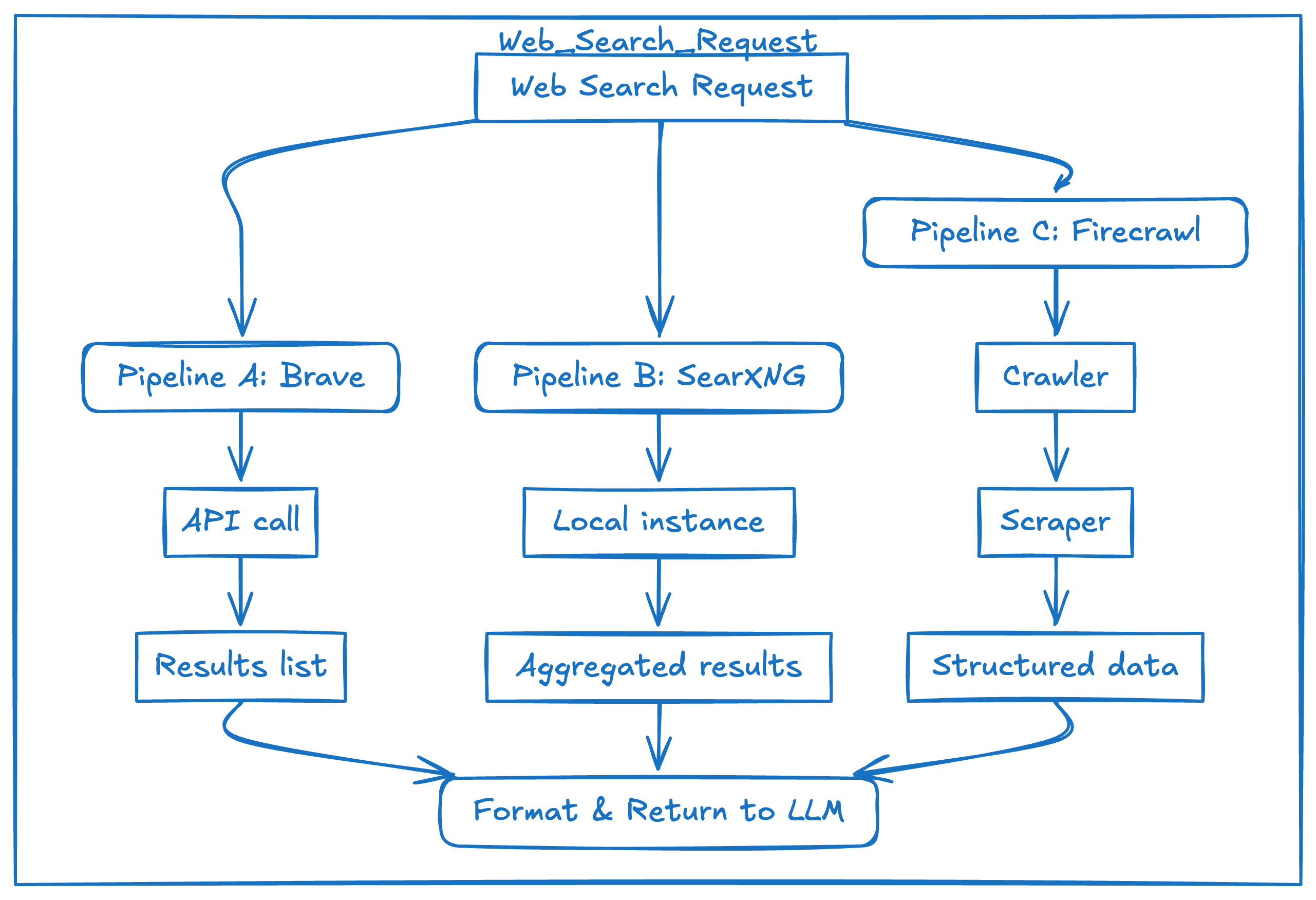

AI models have knowledge cutoffs, limiting their awareness of recent events and developments. Several MCP servers address this limitation through web search capabilities.

The Brave Search MCP server connects Claude to Brave's commercial search API:

    // Brave Web Search MCP tool
    server.addTool(
      "web_search",
      "Search the web for information",
      {
        type: "object",
        properties: {
          query: { type: "string", description: "Search query" },
          count: {
            type: "number",
            description: "Number of results to return",
            default: 5
          }
        },
        required: ["query"]
      },
      async ({ query, count = 5 }) => {
        // Implementation for web search with results formatting
        // ...
      }
    );

For developers seeking free alternatives, the SearXNG MCP server provides a self-hosted search solution that aggregates results from various search engines. This server allows users to run searches across multiple search engines without relying on commercial APIs.

Specialized search services like the Firecrawl MCP server enable deeper web scraping and content extraction. A practical example is researching doctor reviews. A user can prompt: "I am considering seeing these doctors for an ankle injury. Can you please search for detailed information on ratings and reviews for each of them?" The MCP server handles the web crawling, content extraction, and data organization, providing structured information from across the web.

Merging results from Brave, SearXNG, and Firecrawl into a unified LLM response

These web search capabilities ensure AI assistants remain relevant and accurate when discussing time-sensitive topics, bridging the gap between their training data and current reality.
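
When several search servers answer the same question, their results usually overlap. The sketch below shows one way a client might merge them into a single list, deduplicating by URL. The result shape (`title`, `url`) and the `mergeResults` helper are assumptions for illustration; the individual servers do not necessarily return data in this form.

```javascript
// Reduce a URL to a comparison key: host plus path, without the
// fragment or trailing slashes.
function canonicalUrl(raw) {
  const url = new URL(raw);
  const path = url.pathname.replace(/\/+$/, "");
  return `${url.hostname}${path}${url.search}`;
}

// resultSets maps a source name to its list of { title, url } results.
function mergeResults(resultSets) {
  const merged = new Map();
  for (const [source, results] of Object.entries(resultSets)) {
    for (const result of results) {
      const key = canonicalUrl(result.url);
      const existing = merged.get(key);
      if (existing) {
        existing.sources.push(source);
      } else {
        merged.set(key, { ...result, sources: [source] });
      }
    }
  }
  // Results reported by more sources come first; ties keep insertion order.
  return [...merged.values()].sort((a, b) => b.sources.length - a.sources.length);
}

const merged = mergeResults({
  brave: [
    { title: "A", url: "https://example.com/a/" },
    { title: "B", url: "https://example.com/b" },
  ],
  searxng: [{ title: "B (mirror entry)", url: "https://EXAMPLE.com/b#top" }],
});
console.log(merged.map((r) => `${r.title}: ${r.sources.join(", ")}`));
```

Ranking by how many sources agree is only one possible heuristic; a client could equally keep each engine's original order.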

Image Generation and Manipulation

Creating and editing visual content traditionally requires specialized skills and software. The Stability AI MCP server connects Claude to powerful image manipulation tools.

For background removal, users can simply prompt:

remove-background: from profile_photo.jpg

The MCP server handles the entire process, from retrieving the image to processing it with AI models and saving the result:

    // Stability AI MCP tool for background removal
    server.addTool(
      "remove_background",
      "Remove the background from an image",
      {
        type: "object",
        properties: {
          image_path: { type: "string", description: "Path to the input image file" },
          output_path: { type: "string", description: "Path for the output image" }
        },
        required: ["image_path"]
      },
      async ({ image_path, output_path }) => {
        // Implementation for background removal using Stability AI
        // ...
      }
    );

The server also supports image generation from text descriptions. When users request "Generate an image of a mountain lake at sunset," the server uses Stable Diffusion models to create the image and save it to the specified location. This democratizes visual creation, allowing anyone to produce custom imagery that would previously have required specialized skills or expensive resources.

Design-to-Code Conversion

The Figma MCP server bridges the gap between design and implementation. Developers can connect Claude to Figma designs, allowing it to analyze design elements and convert them into functional code.

Users can prompt:

I'd like you to convert this Figma design to code using HTML and Tailwind CSS. The frame I want you to focus on is titled "Card".

The Figma MCP server fetches the design data from Figma, making it available to Claude:

    // Figma MCP tool for getting frame information
    server.addTool(
      "get_figma_frame",
      "Get detailed information about a specific frame in a Figma file",
      {
        type: "object",
        properties: {
          file_url: { type: "string", description: "URL of the Figma file" },
          frame_name: { type: "string", description: "Name of the frame to retrieve" }
        },
        required: ["file_url", "frame_name"]
      },
      async ({ file_url, frame_name }) => {
        // Implementation for fetching Figma frame data
        // ...
      }
    );

Claude then analyzes the design data and generates the corresponding HTML and CSS code, dramatically accelerating the design implementation process. This seamless bridge between design and development reduces handoff friction and ensures more accurate implementations of design intentions.

Memory for Persistent Knowledge

The inability to retain context across conversations limits many AI applications. The Knowledge Graph Memory server provides persistent storage for information, enabling AI assistants to remember past interactions and build personalized knowledge bases.

The memory storage tool needs only the content to remember and an optional list of tags:

    // Memory MCP tool for storing information
    server.addTool(
      "store_memory",
      "Store information in memory",
      {
        type: "object",
        properties: {
          content: { type: "string", description: "Content to remember" },
          tags: {
            type: "array",
            items: { type: "string" },
            description: "Tags to categorize this memory"
          }
        },
        required: ["content"]
      },
      async ({ content, tags = [] }) => {
        // Implementation for semantic memory storage
        // ...
      }
    );

A customer service AI can recall a customer's previous issues without asking them to repeat information. A research assistant can build up a knowledge base of a user's interests and preferences over time. This transforms single interactions into ongoing relationships, making AI assistants feel more helpful and personalized as they accumulate knowledge about specific users and their needs.
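
To make the store-and-recall idea concrete, here is a deliberately simple sketch that keeps memories in an array and looks them up by tag. The `storeMemory` and `recallByTag` helpers are hypothetical. It is a plain tag lookup, not a knowledge graph or semantic search, and because it lives in process memory it would not survive a restart; a real server would persist entries to disk or a database.

```javascript
// Hypothetical in-memory list of stored entries.
const memories = [];

// Save a piece of content with lowercase tags and a timestamp.
function storeMemory(content, tags = []) {
  const entry = {
    id: memories.length + 1,
    content,
    tags: tags.map((tag) => tag.toLowerCase()),
    storedAt: new Date().toISOString(),
  };
  memories.push(entry);
  return entry;
}

// Return the content of every entry carrying the given tag (case-insensitive).
function recallByTag(tag) {
  const wanted = tag.toLowerCase();
  return memories
    .filter((entry) => entry.tags.includes(wanted))
    .map((entry) => entry.content);
}

storeMemory("Customer reported login failures on mobile.", ["Support", "login"]);
storeMemory("Customer prefers email over phone.", ["preferences"]);
console.log(recallByTag("support"));
```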

Flight Search and Booking

The Flights MCP server connects Claude to the Duffel API, enabling comprehensive flight search capabilities. Users can make natural language requests:

I'm looking for flights from San Francisco to Tokyo between May 20-25, preferably in premium economy, arriving before noon.

The MCP server translates these natural requests into structured API calls, allowing Claude to search across airlines, dates, and routes to find optimal options. Users can compare prices across dates, analyze route options, and get detailed information about specific flights including cabin features and bag policies.

This integration transforms the flight search experience from filling out structured forms to having a natural conversation about travel plans. The AI handles the complexity of finding and filtering options, presenting only the most relevant results based on the user's stated preferences.

Time and Timezone Management

Time calculations and timezone conversions are surprisingly complex. The Time MCP server provides tools for working with dates, times, and timezones, enabling AI assistants to handle temporal queries precisely.

The timezone conversion tool demonstrates this capability:

    // Time MCP tool for converting between timezones
    server.addTool(
      "convert_time",
      "Convert time between timezones",
      {
        type: "object",
        properties: {
          source_timezone: {
            type: "string",
            description: "IANA name of the source timezone (e.g. 'America/New_York')"
          },
          time: {
            type: "string",
            description: "Time to convert (24-hour format, e.g. '16:30')"
          },
          target_timezone: {
            type: "string",
            description: "IANA name of the target timezone (e.g. 'Asia/Tokyo')"
          }
        },
        required: ["source_timezone", "time", "target_timezone"]
      },
      async ({ source_timezone, time, target_timezone }) => {
        // Implementation for timezone conversion
        // ...
      }
    );

A global team coordinator can easily plan meetings across multiple timezones. A project manager can calculate accurate delivery dates accounting for holidays and working days. A travel planner can help users understand time differences at their destinations. This removes the complexity of time calculations from users, letting them express temporal questions naturally and receive accurate, usable answers.
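
One way a timezone conversion could be implemented with nothing but the built-in `Intl.DateTimeFormat` is sketched below. It is not the Time MCP server's code. The `date` argument is an assumption added for this example: a wall-clock time alone does not determine the UTC offset, because daylight saving rules depend on the calendar date.

```javascript
// Read the wall-clock fields that an instant has in a given IANA timezone.
function wallClock(instantMs, timeZone) {
  const parts = new Intl.DateTimeFormat("en-US", {
    timeZone,
    hourCycle: "h23",
    year: "numeric", month: "2-digit", day: "2-digit",
    hour: "2-digit", minute: "2-digit", second: "2-digit",
  }).formatToParts(new Date(instantMs));
  const field = (type) => Number(parts.find((p) => p.type === type).value);
  return {
    year: field("year"), month: field("month"), day: field("day"),
    hour: field("hour"), minute: field("minute"), second: field("second"),
  };
}

// Offset from UTC, in milliseconds, that the timezone has at the given instant.
function offsetMs(instantMs, timeZone) {
  const w = wallClock(instantMs, timeZone);
  return Date.UTC(w.year, w.month - 1, w.day, w.hour, w.minute, w.second) - instantMs;
}

// Convert a local date and time in the source zone to the target zone.
function convertTime({ date, time, source_timezone, target_timezone }) {
  const [year, month, day] = date.split("-").map(Number);
  const [hour, minute] = time.split(":").map(Number);
  const wallAsUtc = Date.UTC(year, month - 1, day, hour, minute);
  // Two passes, so the offset is taken at the actual instant rather than
  // at the first guess; this matters close to daylight saving changes.
  let instant = wallAsUtc - offsetMs(wallAsUtc, source_timezone);
  instant = wallAsUtc - offsetMs(instant, source_timezone);
  const t = wallClock(instant, target_timezone);
  const pad = (n) => String(n).padStart(2, "0");
  return {
    date: `${t.year}-${pad(t.month)}-${pad(t.day)}`,
    time: `${pad(t.hour)}:${pad(t.minute)}`,
  };
}

console.log(convertTime({
  date: "2024-01-15",
  time: "16:30",
  source_timezone: "America/New_York",
  target_timezone: "Asia/Tokyo",
}));
```

For the call shown, 16:30 on 15 January 2024 in New York corresponds to 06:30 on 16 January in Tokyo. An unrecognized timezone name makes `Intl.DateTimeFormat` throw a `RangeError`, which a handler would want to turn into a readable error message.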

Google Drive Integration

Document management demands context-aware assistance. The Google Drive MCP server connects AI to your documents, enabling intelligent search, analysis, and creation.

The Google Drive search tool showcases this integration:

    // Google Drive MCP tool for searching files
    server.addTool(
      "search",
      "Search for files in Google Drive",
      {
        type: "object",
        properties: {
          query: { type: "string", description: "Search query" }
        },
        required: ["query"]
      },
      async ({ query }) => {
        // Implementation for Google Drive search
        // ...
      }
    );

A manager can find all budget-related documents from the past year without remembering exact filenames. A team member can request summaries of lengthy documents without opening each one. A content creator can have AI analyze engagement metrics across multiple documents to identify successful patterns. This transforms document management from manual navigation to conversation-based retrieval, making information more accessible regardless of how it's organized or where it's stored.

Slack Integration

Team communication often suffers from information silos. The Slack MCP server enables AI to participate in workplace communications, sharing information and coordinating across channels.

The essential code for the Slack messaging tool is remarkably concise:

    // Slack MCP tool for posting a message
    server.addTool(
      "slack_post_message",
      "Post a new message to a Slack channel",
      {
        type: "object",
        properties: {
          channel_id: { type: "string", description: "The ID of the channel to post to" },
          text: { type: "string", description: "The message text to post" }
        },
        required: ["channel_id", "text"]
      },
      async ({ channel_id, text }) => {
        // call Slack’s chat.postMessage with { channel: channel_id, text }
        // …
      }
    );

A project update can be automatically summarized and shared with stakeholders. Key information from a technical discussion can be extracted and documented. Team members can be notified about relevant developments without manual message forwarding.
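
The `chat.postMessage` call referred to in the handler's comment is a method of Slack's Web API. The sketch below builds the HTTP request for it without sending anything, so it can be inspected safely. The `buildPostMessageRequest` helper, the `SLACK_BOT_TOKEN` variable name, and the channel ID are placeholders chosen for this example.

```javascript
// Build (but do not send) the HTTP request for Slack's chat.postMessage method.
function buildPostMessageRequest(token, channel_id, text) {
  if (!channel_id || !text) {
    throw new Error("channel_id and text are required");
  }
  return {
    url: "https://slack.com/api/chat.postMessage",
    options: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json; charset=utf-8",
      },
      body: JSON.stringify({ channel: channel_id, text }),
    },
  };
}

const request = buildPostMessageRequest("xoxb-placeholder", "C0123456789", "Build passed");
console.log(request.url, request.options.body);

// Sending it needs a real bot token, so this part is left commented out:
// const { url, options } = buildPostMessageRequest(
//   process.env.SLACK_BOT_TOKEN, "C0123456789", "Build passed");
// const reply = await (await fetch(url, options)).json();
// if (!reply.ok) throw new Error(reply.error);
```

Slack reports failures in the response body through an `ok` flag and an `error` string, so checking the HTTP status alone is not enough.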

This integration streamlines information sharing across teams, ensuring insights and updates reach the right people at the right time without manual copy-pasting or channel switching.

Conclusion

MCP transforms what AI can do by connecting language models to real-world systems. These practical use cases show how MCP bridges the gap between AI's language capabilities and actual tools, databases, and services.

The pattern for building MCP servers remains consistent: identify areas where AI assistants could deliver value if they had access to specific tools or data sources, then implement those connections using the standardized MCP approach. The implementation typically involves defining the tool's parameters, creating the function to handle requests, and integrating with external services or APIs.
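
The three steps can be seen end to end in the toy example below. `ToolRegistry` is a hypothetical stand-in that only mirrors the `addTool(name, description, schema, handler)` shape used throughout this article; it is not an MCP SDK class, and it checks nothing beyond required arguments.

```javascript
// Hypothetical stand-in for an MCP server object.
class ToolRegistry {
  constructor() {
    this.tools = new Map();
  }

  // Steps 1 and 2: register the parameter schema and the handler.
  addTool(name, description, inputSchema, handler) {
    this.tools.set(name, { description, inputSchema, handler });
  }

  // Check required arguments, then pass the call to the handler.
  callTool(name, args = {}) {
    const tool = this.tools.get(name);
    if (!tool) throw new Error(`Unknown tool: ${name}`);
    const required = tool.inputSchema.required ?? [];
    const missing = required.filter((key) => !(key in args));
    if (missing.length > 0) {
      throw new Error(`Missing arguments: ${missing.join(", ")}`);
    }
    return tool.handler(args);
  }
}

const server = new ToolRegistry();
server.addTool(
  "echo",
  "Return the given text unchanged",
  {
    type: "object",
    properties: { text: { type: "string", description: "Text to echo" } },
    required: ["text"],
  },
  // Step 3 would call an external service here; this handler just echoes.
  ({ text }) => ({ content: [{ type: "text", text }] })
);

const result = server.callTool("echo", { text: "hello" });
console.log(result.content[0].text);
```

The handler here is synchronous to keep the example short. The handlers shown earlier are `async`, in which case `callTool` would return a promise to be awaited.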

As developers create more MCP servers, the capabilities of AI assistants continue to expand. These connections between AI and external systems enable increasingly powerful applications that combine natural language understanding with concrete, real-world actions. The result is AI systems that not only understand what users want, but can actually help them accomplish their goals through direct interaction with the tools and data sources they use every day.